Visualizing Stammering Through the Lens of Neuroscience & Artificial Intelligence

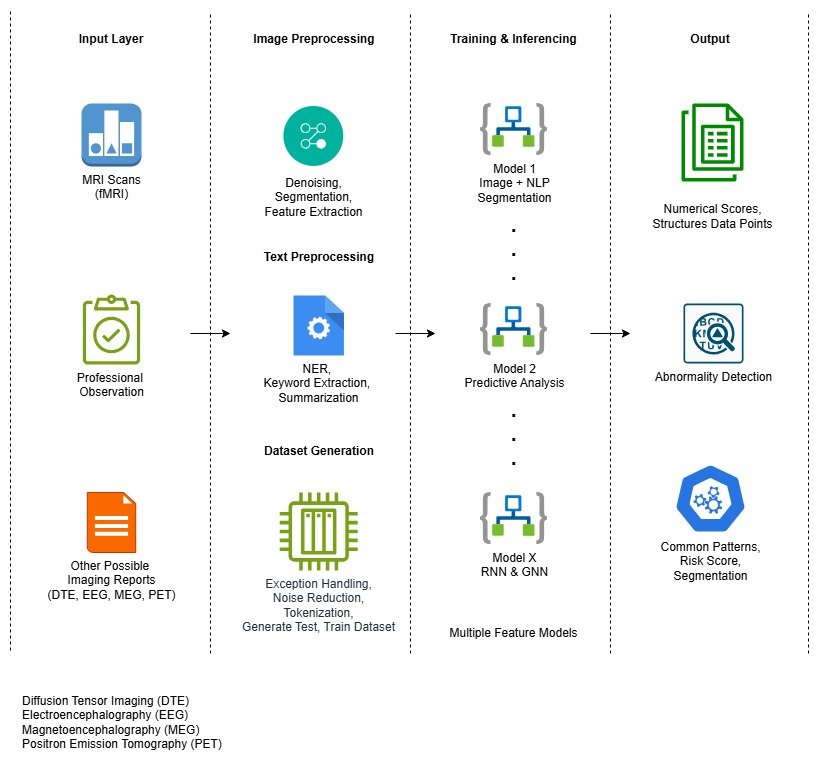

Understanding speech disfluencies such as stammering, along with other neurodevelopmental conditions that affect speech, requires a deeper look into how the brain processes language, auditory feedback, and motor control. This research introduces an integrated, AI-driven pipeline that combines neuroimaging, professional observations, psychological and physiological factors, linguistic data, and machine learning to identify speech-related abnormalities and to uncover neural patterns and trends associated with stammering and other neurodevelopmental speech conditions.

A Multimodal Approach to Speech and Brain Pattern Analysis

This section is presented in a simple, easy-to-understand way for a general audience. More detailed technical information can be found in my technical research papers.

1: Input Layer: Multimodal Brain and Behavioural Data

The process begins with capturing rich, diverse datasets across multiple modalities:

- MRI & fMRI Scans: Capture brain structure and functional activity (MRI, fMRI, T1, T2, DWI, etc.).

- Professional Observation: Clinical notes and subjective speech assessments.

- Other Imaging Reports: Includes reports such as Diffusion Tensor Imaging (DTI) and EEG, which provide deeper insights into brain connectivity, electrical activity, and metabolic function.

- Psychological, physiological and lifestyle factors.

2: Image and Text Preprocessing

Before feeding data into AI models, both image and text inputs undergo extensive preprocessing:

Image Preprocessing

- Denoising & Segmentation: Enhancing image clarity and isolating relevant brain regions of interest (ROIs).

- Feature Extraction: Identifying spatial and temporal brain features linked to speech and motor control.
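As a minimal sketch of the two image steps above, the snippet below applies a 3x3 mean filter to a 2D slice (a deliberately simple stand-in for denoising; real pipelines typically use more sophisticated filters) and then extracts one ROI feature, the mean intensity inside a binary mask. The slice, the mask, and the function names are hypothetical illustrations, not the pipeline's actual implementation.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[bool]]


def mean_filter_3x3(img: Image) -> Image:
    """Denoise a 2D slice by replacing each pixel with the mean of its 3x3 neighbourhood.

    Edge pixels average only the neighbours that fall inside the image.
    """
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            vals = [
                img[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            ]
            out[r][c] = sum(vals) / len(vals)
    return out


def roi_mean_intensity(img: Image, mask: Mask) -> float:
    """Simple ROI feature: mean intensity of the pixels where the mask is True."""
    vals = [img[r][c] for r in range(len(img)) for c in range(len(img[0])) if mask[r][c]]
    if not vals:
        raise ValueError("ROI mask is empty")
    return sum(vals) / len(vals)


# Hypothetical 4x4 slice with one noisy spike, and a 2x2 ROI in the top-left corner.
slice_ = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 9.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
roi = [[r < 2 and c < 2 for c in range(4)] for r in range(4)]
smoothed = mean_filter_3x3(slice_)
print(roi_mean_intensity(slice_, roi), roi_mean_intensity(smoothed, roi))
```

Smoothing spreads the isolated spike into its neighbours, so the ROI mean drops from 3.0 on the raw slice to about 2.08 after filtering.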

Text Preprocessing

- NER (Named Entity Recognition), keyword extraction, and summarization of clinical observations.

- Dataset Generation: Includes noise reduction, tokenization, and proper labelling to build high-quality training and testing datasets.
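A minimal sketch of the tokenization and keyword-extraction steps on a clinical note, using plain word frequency after stopword removal. The stopword list, the note text, and the function names are hypothetical; a production pipeline would more likely rely on an NLP library with a trained NER model, which this snippet does not attempt.

```python
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical, deliberately tiny stopword list for illustration only.
STOPWORDS = {"the", "a", "an", "and", "of", "in", "on", "with", "during", "is", "was", "to"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphabetic word tokens (hyphenated words kept whole)."""
    return re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())


def top_keywords(text: str, k: int = 3) -> List[Tuple[str, int]]:
    """Return the k most frequent non-stopword tokens with their counts."""
    counts = Counter(t for t in tokenize(text) if t not in STOPWORDS)
    return counts.most_common(k)


# Hypothetical clinical observation.
note = (
    "Patient shows blocks and repetitions during reading. "
    "Blocks increase with time pressure; repetitions reduce in choral reading."
)
print(top_keywords(note))
```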

3: Model Training & Inference

Multiple deep learning models are trained for different purposes using the preprocessed datasets:

- Model 1 (Image + NLP Segmentation): Combines imaging and text data to segment regions of interest in the brain and correlate them with speech behaviour.

- Model 2 (Predictive Analysis): Forecasts speech disfluency episodes or risk levels based on neural patterns and historical data.

- Model X (RNNs and GNNs): Uses Recurrent Neural Networks for sequential speech data and Graph Neural Networks for modelling brain connectivity.

- Additional models and layers, built on the factor library and organized into phases of prediction. Together they produce a multimodal, hierarchical output based on permutations and combinations of factors.
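The Graph Neural Network idea behind Model X can be illustrated with a minimal, untrained sketch of one message-passing step over a brain connectivity graph: each region (node) updates its feature vector to a weighted average of its own features and those of connected regions, weighted by connection strength. The adjacency weights, node features, and function name are hypothetical; real GNN layers also apply learned weight matrices and nonlinearities, which are omitted here.

```python
from typing import List

Matrix = List[List[float]]


def message_passing_step(adj: Matrix, features: Matrix) -> Matrix:
    """One weighted mean-aggregation step (with self-loops) over a connectivity graph.

    adj[i][j] is the connection strength between regions i and j (0 means no edge).
    features[i] is the feature vector of region i.
    """
    n, d = len(features), len(features[0])
    updated = []
    for i in range(n):
        # Self-loop with weight 1 so each region keeps part of its own signal.
        weights = [1.0 if j == i else adj[i][j] for j in range(n)]
        total = sum(weights)
        updated.append([
            sum(weights[j] * features[j][k] for j in range(n)) / total
            for k in range(d)
        ])
    return updated


# Hypothetical 3-region graph: region 0 connects strongly to region 1 and weakly to region 2.
adj = [
    [0.0, 0.8, 0.2],
    [0.8, 0.0, 0.0],
    [0.2, 0.0, 0.0],
]
features = [[1.0], [0.0], [0.0]]
out = message_passing_step(adj, features)
print(out)
```

After one step, the signal from region 0 spreads more strongly to region 1 than to region 2, mirroring the difference in connection strength.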

4: Output: Actionable Insights

The trained models yield several high-value outcomes:

- Numerical Scores and Structured Data Points: Quantifies neural activation and speech metrics.

- Abnormality Detection: Flags deviations in speech patterns or brain signals.

- Common Patterns and Risk Scores: Identifies recurring trends across individuals, aiding in segmentation of speech conditions and early diagnosis.
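As one hedged illustration of how structured data points could be turned into a risk score, the sketch below combines a few normalized features with a logistic function and flags scores at or above a threshold. The feature names, weights, bias, and 0.5 threshold are all hypothetical placeholders; in practice such weights would be learned by the trained models rather than set by hand.

```python
import math
from typing import Dict

# Hypothetical hand-set weights for illustration only; a real model would learn these.
WEIGHTS: Dict[str, float] = {
    "disfluency_rate": 2.0,      # normalized speech metric
    "neural_activation_z": 1.0,  # normalized neural activation feature
    "anxiety_score": 0.5,        # normalized psychological factor
}
BIAS = -1.5


def risk_score(features: Dict[str, float]) -> float:
    """Map a weighted sum of features to a 0-1 score with the logistic function."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def flag_high_risk(features: Dict[str, float], threshold: float = 0.5) -> bool:
    """Flag a subject whose risk score meets or exceeds the threshold."""
    return risk_score(features) >= threshold


subject = {"disfluency_rate": 0.6, "neural_activation_z": 0.2, "anxiety_score": 1.0}
print(round(risk_score(subject), 3), flag_high_risk(subject))
```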

We’ve kept the information on this platform simple and easy to understand for general users. For those interested in more detailed insights, you’re welcome to explore our technical papers.

Neuro-AI Analytics Factor Library

Figure: Usage of Multiple Brain Imaging Techniques

- Multiple Brain Imaging Modalities

- Professional Observation

- Levels of Stammering

- Other Neurodevelopmental Conditions, Physical Brain Damage & Injury

- Psychological & Personality Factors

- Various Individual Factors: Age Group, Gender, Professions, Background and Lifestyle, etc.

Across all modalities and the psychological and individual factors above, we trained the model on approximately 2,500 features per subject, along with their permutations and combinations.
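The phrase "permutations and combinations" hides a steep growth in the number of candidate feature interactions. Assuming, purely for illustration, that interactions are unordered pairs or triples drawn from the roughly 2,500 per-subject features (the text does not specify which interaction orders are actually used), the counts can be computed directly:

```python
from math import comb

N_FEATURES = 2_500  # approximate per-subject feature count stated in the text

# Number of distinct unordered feature pairs and triples (no repeated features).
pairs = comb(N_FEATURES, 2)
triples = comb(N_FEATURES, 3)

print(f"pairs:   {pairs:,}")
print(f"triples: {triples:,}")
```

This gives 3,123,750 pairs and 2,601,042,500 triples, so even low-order interactions quickly outnumber the raw features by several orders of magnitude.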

Analysis and Applications of Multiple Brain Modalities

Below are the key brain imaging and recording techniques used in our research, each offering unique insights into speech and neural processes. Structural scans such as T1 MRI reveal anatomical differences, while fMRI maps functional activity during speech. DTI uncovers connectivity between brain regions, and EEG/MEG capture the precise timing of neural activity, with MEG also helping to localize where it originates. PET imaging assesses metabolic and neurotransmitter function, completing a multimodal view of the brain for comprehensive analysis.

T1 MRI (Structural MRI)

- What it shows: Brain anatomy and structure.

- Use in Stammering Analysis:

  - Detects structural differences in regions linked to speech production and motor control.

  - Detects brain asymmetry.

  - Tracks developmental changes.

  - Identifies biomarkers of persistent vs. recovered stammering.
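Brain asymmetry is commonly summarized with a laterality (asymmetry) index. A minimal sketch, assuming left and right hemisphere measurements (for example, ROI volumes from segmented T1 scans) are already available; the normalization (L - R) / (L + R) is one common convention among several, and the region names and values are hypothetical:

```python
def asymmetry_index(left: float, right: float) -> float:
    """Laterality index: positive = leftward, negative = rightward asymmetry.

    For non-negative measurements the result lies in [-1, 1].
    """
    total = left + right
    if total == 0:
        raise ValueError("left + right must be non-zero")
    return (left - right) / total


# Hypothetical ROI volumes (mm^3) for one subject: (left, right).
rois = {"region_a": (5200.0, 4800.0), "region_b": (3000.0, 3000.0)}
for name, (left, right) in rois.items():
    print(name, round(asymmetry_index(left, right), 3))
```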

fMRI (Functional MRI)

- What it shows: Brain activity, measured indirectly through changes in blood oxygenation (the BOLD signal).

- Use in Stammering Analysis:

  - Identifies which brain regions activate during speech.

  - Shows overactivation or underactivation in speech-related networks.

  - Reveals timing and coordination issues in motor-speech planning.
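One simple way to express over- or underactivation is to compare a subject's ROI activation (for example, a contrast estimate from a speech task) with a reference group using a z-score. The reference values, the |z| > 2 cut-off, and the function names below are hypothetical choices for illustration, not thresholds taken from this research:

```python
from statistics import mean, stdev
from typing import List


def activation_z(subject_value: float, reference: List[float]) -> float:
    """z-score of a subject's ROI activation relative to a reference group."""
    return (subject_value - mean(reference)) / stdev(reference)


def classify_activation(z: float, cutoff: float = 2.0) -> str:
    """Label activation as over-, under-, or typical using a symmetric z cut-off."""
    if z > cutoff:
        return "overactivation"
    if z < -cutoff:
        return "underactivation"
    return "typical"


# Hypothetical reference activations for one speech-related ROI.
reference = [1.0, 1.2, 0.8, 1.1, 0.9]
for value in (1.05, 1.6, 0.4):
    z = activation_z(value, reference)
    print(value, round(z, 2), classify_activation(z))
```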

DTI (Diffusion Tensor Imaging)

- What it shows: White matter tracts and connectivity.

- Use in Stammering Analysis:

  - Measures the integrity of connections between speech-related areas.

  - Studies have shown disrupted white matter tracts in people who stammer.

  - Useful for exploring neural communication inefficiencies.
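White matter integrity in DTI is most often summarized by fractional anisotropy (FA), computed from the three eigenvalues of the diffusion tensor at each voxel. FA is 0 for perfectly isotropic diffusion and approaches 1 when diffusion runs along a single direction, as in a coherent fibre tract. A minimal sketch of the standard formula, assuming the eigenvalues have already been estimated (the example values are hypothetical):

```python
import math


def fractional_anisotropy(l1: float, l2: float, l3: float) -> float:
    """Fractional anisotropy from the three diffusion-tensor eigenvalues.

    FA = sqrt(1/2) * sqrt((l1-l2)^2 + (l2-l3)^2 + (l3-l1)^2) / sqrt(l1^2 + l2^2 + l3^2)
    """
    denom = math.sqrt(l1 ** 2 + l2 ** 2 + l3 ** 2)
    if denom == 0:
        raise ValueError("eigenvalues must not all be zero")
    num = math.sqrt((l1 - l2) ** 2 + (l2 - l3) ** 2 + (l3 - l1) ** 2)
    return math.sqrt(0.5) * num / denom


# Hypothetical eigenvalues (units of 10^-3 mm^2/s).
print(round(fractional_anisotropy(1.0, 1.0, 1.0), 3))  # isotropic voxel
print(round(fractional_anisotropy(1.7, 0.3, 0.2), 3))  # fibre-like voxel
```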

EEG (Electroencephalography)

- What it shows: Real-time electrical activity of the brain.

- Use in Stammering Analysis:

  - Offers millisecond-level temporal resolution.

  - Useful for studying the timing of neural signals during speech.

  - Can capture speech preparation and auditory feedback responses.
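A common way to study the timing of speech-related neural signals in EEG is event-related potential (ERP) averaging: short epochs time-locked to events such as speech onset are baseline-corrected and averaged, so activity consistently tied to the event stands out from background fluctuations. A minimal single-channel sketch without filtering or artifact rejection; the sampling rate, window lengths, synthetic signal, and function names are hypothetical:

```python
from typing import List, Tuple


def erp_average(signal: List[float], events: List[int], pre: int, post: int) -> List[float]:
    """Average baseline-corrected epochs from `pre` samples before to `post` samples after each event."""
    epochs = []
    for e in events:
        if e - pre < 0 or e + post > len(signal):
            continue  # skip events whose window falls outside the recording
        epoch = signal[e - pre:e + post]
        baseline = sum(epoch[:pre]) / pre if pre else 0.0
        epochs.append([x - baseline for x in epoch])
    if not epochs:
        raise ValueError("no complete epochs")
    return [sum(vals) / len(epochs) for vals in zip(*epochs)]


def peak_latency_ms(erp: List[float], pre: int, fs: float) -> Tuple[float, float]:
    """Return (latency in ms relative to the event, amplitude) of the largest absolute deflection."""
    idx = max(range(len(erp)), key=lambda i: abs(erp[i]))
    return (idx - pre) * 1000.0 / fs, erp[idx]


fs = 250.0  # hypothetical sampling rate in Hz (4 ms per sample)
signal = [0.0] * 150
events = [20, 80]  # hypothetical speech-onset sample indices
for e in events:
    signal[e + 25] = 5.0  # synthetic response 100 ms after each event
erp = erp_average(signal, events, pre=10, post=50)
print(peak_latency_ms(erp, pre=10, fs=fs))
```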

Figure: Features per ROI

Stammering is more than just a speech disruption: it is a complex neurodevelopmental condition with consistent brain-level signatures. Leveraging our Neuro-AI pipeline, we applied deep learning-based segmentation, pattern clustering, and temporal trend analysis to brain imaging and behavioural datasets across cohorts classified by stammering intensity, psychological profile, and associated neurodevelopmental traits. Here, we reveal the key findings and trends that emerged from our large-scale analysis.
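Pattern clustering of this kind can be illustrated with k-means, one of the simplest clustering algorithms; the research may well use other methods. The sketch below groups hypothetical two-feature subject vectors (for example, a normalized disfluency score and a normalized neural feature) into k clusters. The features, data, and choice of k are assumptions for illustration:

```python
from typing import List, Tuple

Point = Tuple[float, ...]


def _sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Point], k: int, iters: int = 20) -> Tuple[List[int], List[Point]]:
    """Plain k-means; centroids start at the first k points so results are reproducible."""
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: _sq_dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = new_centroids
    return labels, centroids


# Hypothetical subjects: (normalized disfluency score, normalized neural feature).
subjects = [(0.1, 0.2), (0.9, 0.8), (0.15, 0.1), (0.85, 0.9), (0.2, 0.15), (0.95, 0.85)]
labels, centroids = kmeans(subjects, k=2)
print(labels)
```

Initializing from the first k points keeps the example deterministic; in practice, randomized or k-means++ initialization with several restarts is more robust.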

You can report directly via our Contact Form or email us at the addresses below.